Flex3D: Feed-Forward 3D Generation with Flexible Reconstruction Model and Input View Curation

Preprint

¹GenAI, Meta ²University of Oxford

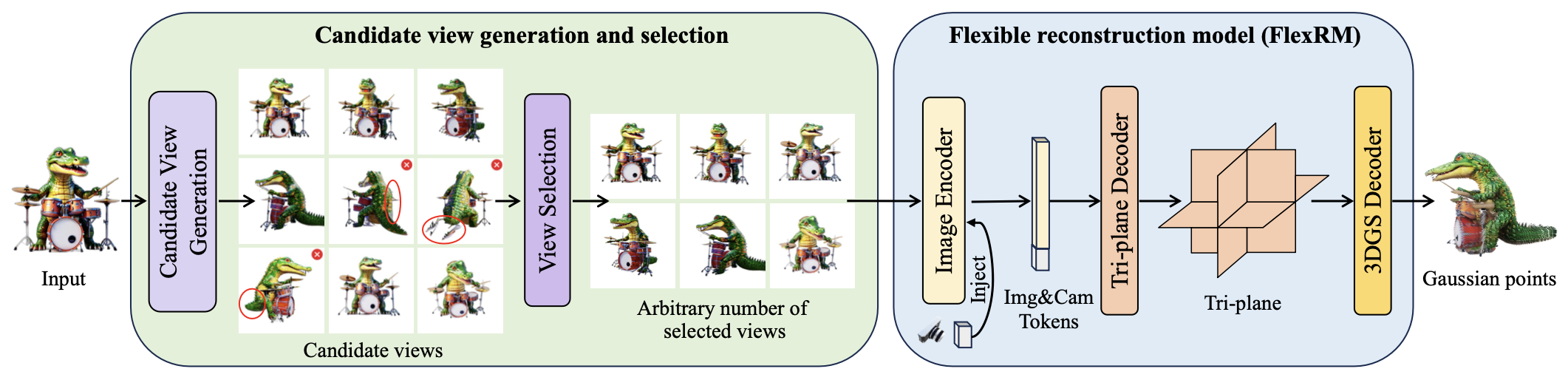

Summary: Flex3D is a two-stage pipeline, combining input view curation with a flexible reconstruction model, that generates high-quality 3D assets from a single image or a text prompt.

Interactive Results

Explore generation results (Gaussian Splats) below.

Method

Acknowledgements

Junlin Han is supported by Meta. We would like to thank Luke Melas-Kyriazi, Runjia Li, Yawar Siddiqui, Minghao Chen, David Novotny, and Natalia Neverova for their helpful discussions and support.